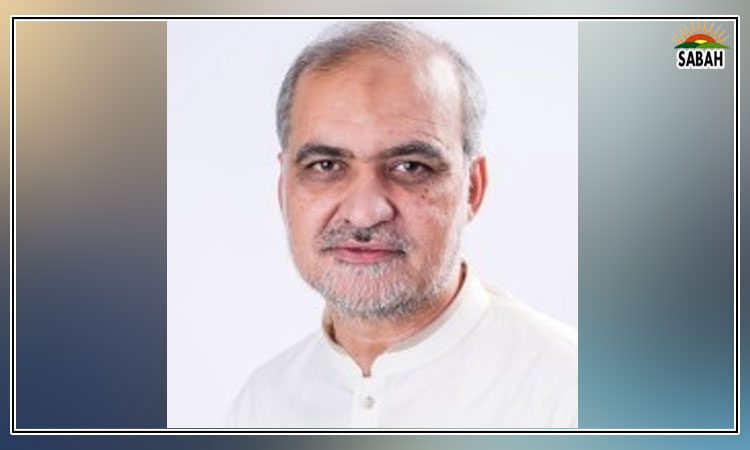

AI: the primacy of machine?

Dr Zafar Khan

As modern technologies in autonomous and semi-autonomous forms are embedded in deterrent forces, many security analysts are raising concerns about whether to keep humans in the loop. Several leading and rising powers are seriously considering the use of emerging technologies such as Artificial Intelligence (AI) and taking the human out of the loop. Extraordinary advances in quantum computing and semiconductors could change the course of warfare, and the side that possesses such technological advantages could overcome stronger adversaries, at least in the domain of conventional warfare. Machine learning and robotics may turn this into reality, with machines functioning faster and without human involvement.

Robert Mazzolin, an expert on AI technologies, argues that AI now exceeds human performance in many activities once held to be too complex for any machine to master. This suggests that a time may arrive, though not in the foreseeable future, when machines could dominate human beings without necessarily involving them. The preeminence, or primacy, of machines, and thereby of autonomous weapons in evolving military strategies, could eventually displace humans, with broader implications for states' military strategies and for their strategic relationships and rivalries. However, AI technologies may confront intuitive and cognitive challenges that only human minds can tackle as the battlefield environment changes.

In this context, Mazzolin says that while AI often has the edge on humans in speed, efficiency and accuracy, its inability to think contextually and its tendency to fail catastrophically when presented with novel situations make many reticent to allow the technology to operate free of human oversight. On the one hand, AI could offer the accuracy, speed and lethality that states need to quickly undermine potential adversaries' capabilities and gain a military edge in the warfare domain. On the other hand, AI with autonomous capabilities raises serious concerns if the human is not kept in the loop, with dire tactical and strategic consequences between rival states, especially nuclear rivals that possess such technologies. There is a danger that AI and autonomous weapons could eventually outmaneuver humans, with dire military consequences: accidental wars, unnecessary collateral damage, and the rule of the machine, carrying out military operations the way the autonomous machine desires.

It may be correct to argue that the competing pursuit of AI technology by great and rising powers, as well as by non-state entities, promotes strategic competition, distrust and global instability. In a recent research contribution, Cameron Hunter and Bleddyn E Bowen argue that the nature of war itself prevents narrow AI from performing, understanding, advising and explaining command decisions in strategy and tactics in any reliable fashion. This is because war requires abductive logical reasoning (which AI cannot do) rather than inductive logic (which it can) to comprehend and execute command decisions. The authors state that AI will in fact remain a halfwit tactician as well as a moron strategist, because tactics is also the thinking part of warfare and requires the same kind of logic as strategy and politics. Being good at Chess does not make an AI good at devising a plan to storm a redoubt. They therefore conclude, with policy implications for governments, that pouring money into narrow AI in the hope of producing genius machine commanders is folly that would end up with something more like the modern Major-General of The Pirates of Penzance. Perhaps it is for the best that AIs restrict themselves to a nice game of Chess.

Courtesy The Express Tribune